Robust Visual Tracking using Multi-Frame Multi-Feature Joint Modeling

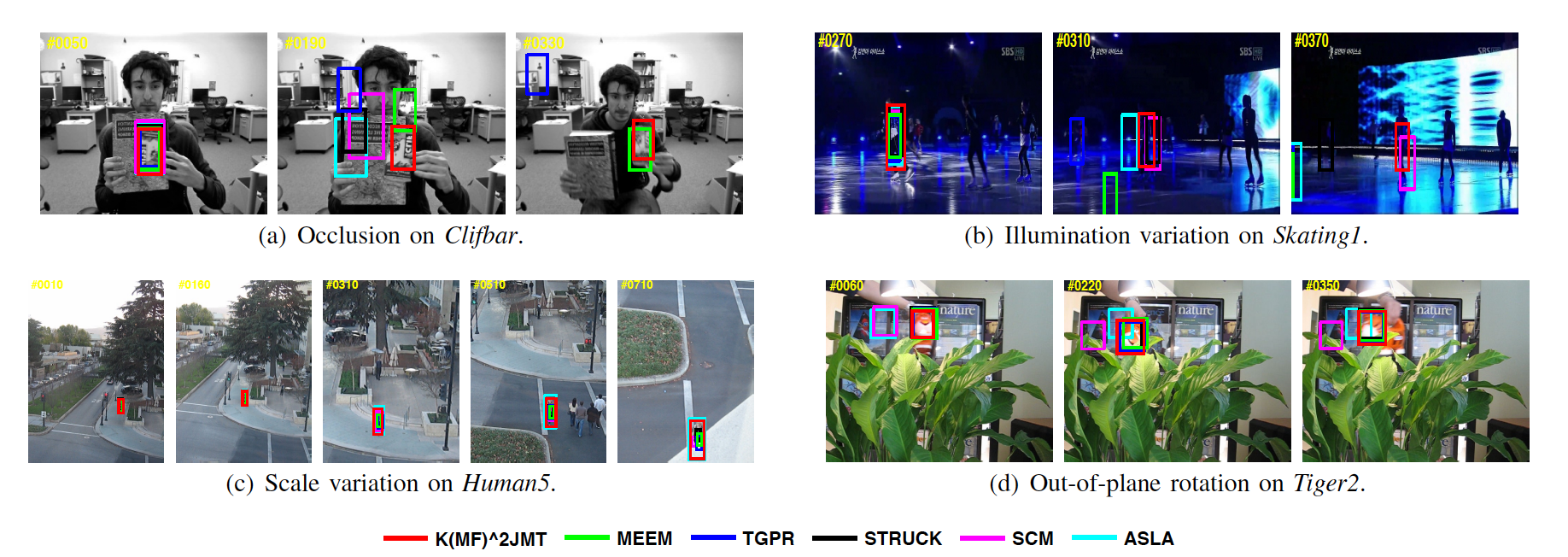

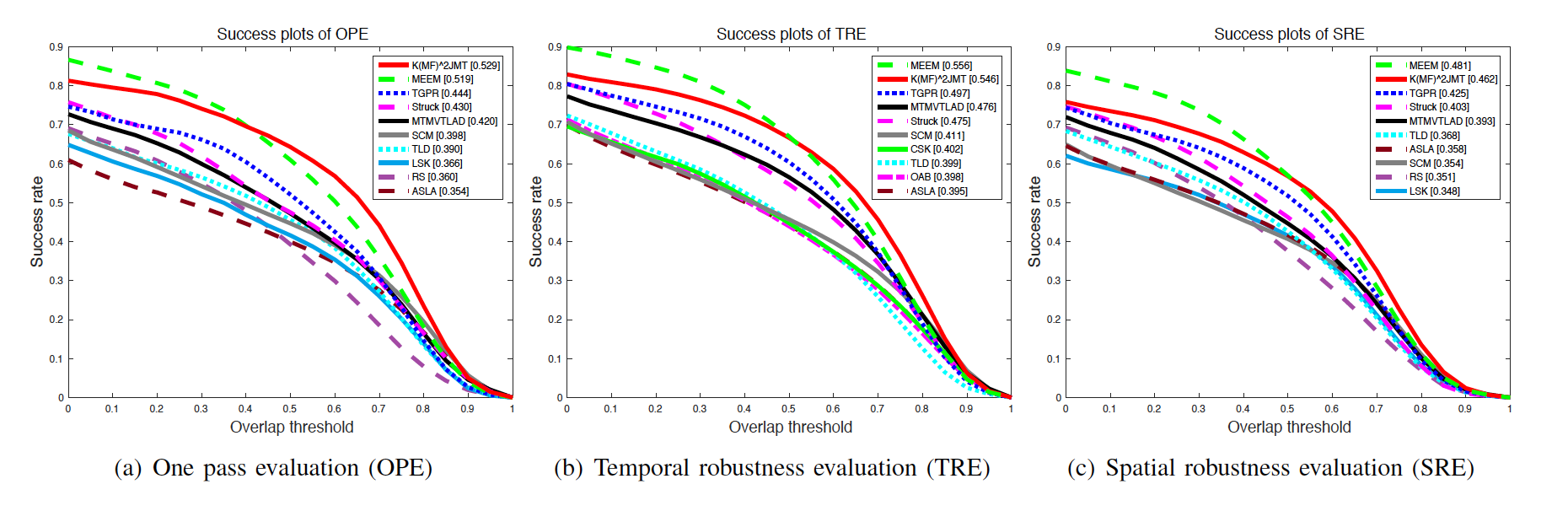

Designing trackers that are both effective and efficient remains a major challenge in complex scenarios involving occlusion, illumination change and pose variation. A promising solution is to integrate the temporal consistency across consecutive frames and multiple feature cues in a unified model. Motivated by this idea, in this work we propose a novel correlation filter-based tracker in which temporal relatedness is reconciled under a multi-task learning framework and multiple feature cues are modeled with a multi-view learning approach. We demonstrate that the resulting regression model can be learned efficiently by exploiting the structure of blockwise diagonal matrices, and we then develop a fast blockwise diagonal matrix inversion algorithm for efficient online tracking. We also incorporate an adaptive scale estimation mechanism to improve tracking stability under scale variation. We implement our tracker using two types of features and test it on two benchmark datasets. Experimental results demonstrate the superiority of the proposed approach over other state-of-the-art trackers.
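The abstract attributes the tracker's efficiency to the structure of a blockwise diagonal matrix. The sketch below assumes this means a K × K grid of n × n diagonal blocks (a layout that arises when per-frame or per-feature correlation-filter terms are diagonalized by the DFT) and illustrates why such a matrix can be inverted in O(nK³) time instead of O((nK)³): entry t of every block only interacts with entry t of the other blocks, so the system splits into n independent K × K systems. This is an illustrative NumPy sketch, not the authors' algorithm; the functions `to_dense` and `blockwise_diag_inv` and the sizes `K` and `n` are hypothetical.

```python
import numpy as np


def to_dense(blocks: np.ndarray) -> np.ndarray:
    """Expand a (K, K, n) array into the dense (K*n, K*n) matrix whose
    (i, j) block is diag(blocks[i, j]). Used here only for checking."""
    K, _, n = blocks.shape
    dense = np.zeros((K * n, K * n), dtype=blocks.dtype)
    for i in range(K):
        for j in range(K):
            dense[i * n:(i + 1) * n, j * n:(j + 1) * n] = np.diag(blocks[i, j])
    return dense


def blockwise_diag_inv(blocks: np.ndarray) -> np.ndarray:
    """Invert a blockwise diagonal matrix stored compactly as (K, K, n).

    The inverse has the same blockwise diagonal structure, so it is
    returned in the same (K, K, n) layout.
    """
    small = np.transpose(blocks, (2, 0, 1))    # (n, K, K): one small system per index t
    small_inv = np.linalg.inv(small)           # batched inversion, O(n K^3) overall
    return np.transpose(small_inv, (1, 2, 0))  # back to the (K, K, n) layout


# Illustrative sizes: K stacked frames/features, n Fourier coefficients.
rng = np.random.default_rng(0)
K, n = 3, 64
blocks = rng.standard_normal((K, K, n)) + 1j * rng.standard_normal((K, K, n))
blocks += 5 * np.eye(K)[:, :, None]  # shift the diagonal so each small system is well conditioned
inv_blocks = blockwise_diag_inv(blocks)
print(np.allclose(to_dense(inv_blocks), np.linalg.inv(to_dense(blocks))))  # True
```

The compact (K, K, n) storage is a design choice for the sketch: it never materializes the (nK) × (nK) matrix, and the dense expansion appears only to verify the result.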

Index Terms—Visual Tracking, Multi-task Learning, Multiview Learning, Blockwise Diagonal Matrix, Correlation Filters.

Download

Supplementary (Complete Experimental Results)

Precomputed Results on OTB-2015 dataset

Users of our code are asked to cite the following publication:

    @article{zhang2018kmf2jmt,
      title   = {Robust Visual Tracking Using Multi-Frame Multi-Feature Joint Modeling},
      author  = {Zhang, Peng and Yu, Shujian and Xu, Jiamiao and You, Xinge and Jiang, Xiubao and Jing, Xiao-Yuan and Tao, Dacheng},
      journal = {IEEE Transactions on Circuits and Systems for Video Technology},
      year    = {2018},
      doi     = {10.1109/TCSVT.2018.2882339},
    }

DEMO

ClifBar (11 MB) | Tiger2 (13 MB) | Skating1 (19 MB) | Human5 (23 MB)